Posted on 20 April 2023.

Granting a Kubernetes workload access to resources within AWS is not too complicated, as long as you know exactly what you’re doing and how the components interact. Once you understand the mechanism properly, the possibilities are endless. Let’s deep-dive into this!

Granting access to AWS Resources

You may need this because a Deployment you created wants to read data from a bucket, push images to an ECR repository, or even update a DNS zone.

Basically, we want a pod in our cluster to have permission to make authenticated calls to AWS, with the proper credentials. The general way of granting access to a resource in AWS is through IAM Roles, so our end-game here is for our Pod to be able to take actions using an IAM Role.

IAM Roles and Kubernetes

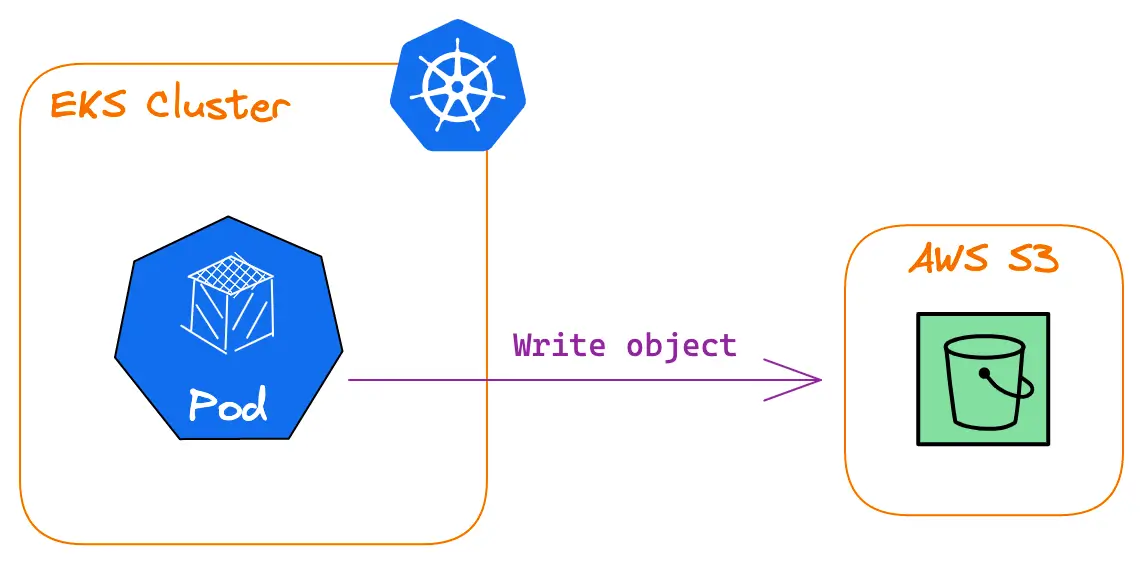

Let’s look at a very simple use case: you want your app, running in a Pod in Kubernetes, to write an object into an S3 bucket.

There are a couple of ways to achieve this; here we will discuss the one recommended by AWS for EKS. A later section of this article covers the alternatives, so don’t hesitate to check them out if you’re interested.

Basically, you will need two things:

- An IAM Role with permissions to write objects to your S3 bucket

- A way for your pod to use this Role

The first item is a common AWS operation, so I will not go into much detail about it in this article. The second item, however, is not as straightforward as it may seem. The AWS documentation explains the setup steps quite explicitly:

- Create an IAM OpenID Connect (OIDC) provider for your cluster

- Configure a Kubernetes ServiceAccount to assume an IAM Role

- Configure your workloads to use this ServiceAccount

However, it does not explain what exactly happens at each step of the process, or why each step is needed.

Why you need an IAM OpenID Connect provider

An IAM Role is an identity that is usually not associated with a specific person or application. Instead, it is assumed by someone, for example a user: AWS then issues temporary credentials that give this user the same permissions as the Role. Allowing a given user to assume a Role is done through the Role’s trust policy, with a statement that looks like this:

```json
{
  "Action": "sts:AssumeRole",
  "Effect": "Allow",
  "Principal": { "AWS": "arn:aws:iam::111122223333:user/john" }
}
```

This statement, when placed in a Role’s trust policy, allows the user john to assume this Role. But in our case, we do not want this Role to be assumed by john: our Pod should be the one assuming it.

Maybe something like this could work?

```json
{
  "Action": "sts:AssumeRole",
  "Effect": "Allow",
  "Principal": { "Kubernetes": "pod-in-cluster-292cc7" }
}
```

You guessed it: it does not!

What we need to get this to work is to have a way of granting an “identity” the permissions to assume our Role, and then use this “identity” in our Pod. What is this “identity” here? Well in Kubernetes, the common resource for managing the identity of an app is the ServiceAccount. Maybe we can use that?

It turns out we can!

Kubernetes can act as an OIDC identity provider for its own ServiceAccount tokens, thanks to its ServiceAccount issuer discovery feature (stable since v1.21). On top of that, AWS IAM lets you register an OIDC identity provider as an IAM identity provider. By combining these two features, we can basically use Kubernetes ServiceAccounts as AWS IAM identities!

In fact, any OIDC provider that implements the OpenID Connect Discovery specification would work here: you just need to register it as an identity provider in AWS IAM. You can then grant your ServiceAccount permission to assume your Role with a trust policy statement like this one:

```json
{
  "Effect": "Allow",
  "Principal": {
    "Federated": "arn:aws:iam::111122223333:oidc-provider/server.example.com"
  },
  "Action": "sts:AssumeRoleWithWebIdentity",
  "Condition": {
    "StringEquals": {
      "server.example.com:sub": "system:serviceaccount:great-namespace:great-serviceaccount",
      "server.example.com:aud": "sts.amazonaws.com"
    }
  }
}
```

What exactly happens here? Let’s look at it bit by bit:

```json
"Principal": {
  "Federated": "arn:aws:iam::111122223333:oidc-provider/server.example.com"
},
```

This statement applies to identities federated by your OIDC provider.

```json
"Effect": "Allow",
"Action": "sts:AssumeRoleWithWebIdentity",
```

You allow these identities to assume your Role. Note that the action here is not the traditional sts:AssumeRole, but sts:AssumeRoleWithWebIdentity: the caller proves its identity with a token issued by the OIDC provider, instead of with existing AWS credentials.

```json
"Condition": {
  "StringEquals": {
    "server.example.com:sub": "system:serviceaccount:great-namespace:great-serviceaccount",
    "server.example.com:aud": "sts.amazonaws.com"
  }
}
```

You want this statement to apply only when the sub (subject) of your identity is the great-serviceaccount ServiceAccount in the great-namespace namespace.

Also, it should only apply when the token used for this identity was issued for talking to the sts.amazonaws.com API (i.e. when the token’s aud, or audience, claim matches this value).
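To make the two conditions concrete, here is a minimal Python sketch (standard library only). It builds a full trust policy around the statement above and checks a set of token claims against its StringEquals block. The function names are hypothetical. The check is a deliberate simplification: real IAM policy evaluation supports many more operators and rules than exact string matching.

```python
import json


def build_trust_policy(provider_arn: str, provider_host: str,
                       namespace: str, service_account: str) -> dict:
    """Trust policy letting one ServiceAccount assume the Role.

    provider_host is the issuer URL without "https://", which IAM uses
    as the prefix of the OIDC condition keys (e.g. "server.example.com").
    """
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Federated": provider_arn},
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    f"{provider_host}:sub":
                        f"system:serviceaccount:{namespace}:{service_account}",
                    f"{provider_host}:aud": "sts.amazonaws.com",
                }
            },
        }],
    }


def claims_match(statement: dict, provider_host: str, claims: dict) -> bool:
    """Simplified StringEquals check: every condition must match a claim.

    A claim may be a string or a list (Kubernetes tokens typically carry
    "aud" as a list); a list matches if it contains the expected value.
    """
    prefix = provider_host + ":"
    for key, expected in statement["Condition"]["StringEquals"].items():
        if not key.startswith(prefix):
            return False
        value = claims.get(key[len(prefix):])
        values = value if isinstance(value, list) else [value]
        if expected not in values:
            return False
    return True


if __name__ == "__main__":
    policy = build_trust_policy(
        "arn:aws:iam::111122223333:oidc-provider/server.example.com",
        "server.example.com", "great-namespace", "great-serviceaccount")
    print(json.dumps(policy, indent=2))
    statement = policy["Statement"][0]
    # Claims of a token issued to the right ServiceAccount, for STS.
    print(claims_match(statement, "server.example.com", {
        "sub": "system:serviceaccount:great-namespace:great-serviceaccount",
        "aud": ["sts.amazonaws.com"],
    }))  # True
    # Same ServiceAccount name, but in another namespace: rejected.
    print(claims_match(statement, "server.example.com", {
        "sub": "system:serviceaccount:other-namespace:great-serviceaccount",
        "aud": ["sts.amazonaws.com"],
    }))  # False
```

Changing only the namespace is enough for the token to be rejected. The sub condition pins one exact ServiceAccount, which is what keeps other workloads in the cluster from using this Role.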

It is worth noting that the quickest and simplest way to set all of this up is to use the OIDC issuer that AWS EKS provides for each cluster, instead of relying on a third-party provider. You then only need to register it in IAM.
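With the EKS-provided issuer, the names used in the trust policy are all derived from the issuer URL. You can read that URL from the cluster.identity.oidc.issuer field returned by the EKS DescribeCluster API. The helper below is a hypothetical sketch of that derivation:

- the provider ARN uses the issuer URL without its https:// scheme;
- the condition keys use that same string as their prefix;
- the OIDC Discovery document lives under /.well-known/openid-configuration.

The issuer URL in the example is a placeholder.

```python
from urllib.parse import urlparse


def oidc_provider_details(issuer_url: str, account_id: str) -> dict:
    """Derive IAM-side identifiers from an OIDC issuer URL (hypothetical helper)."""
    parsed = urlparse(issuer_url)
    if parsed.scheme != "https":
        # IAM only accepts OIDC provider URLs starting with https://
        raise ValueError(f"expected an https issuer URL, got {issuer_url!r}")
    # IAM identifies the provider by the issuer URL minus its scheme,
    # e.g. "oidc.eks.<region>.amazonaws.com/id/<ID>".
    host_and_path = parsed.netloc + parsed.path.rstrip("/")
    return {
        "provider_arn": f"arn:aws:iam::{account_id}:oidc-provider/{host_and_path}",
        # Prefix of the condition keys, as in "<prefix>:sub" and "<prefix>:aud".
        "condition_key_prefix": host_and_path,
        # OIDC Discovery document; among other things it advertises the
        # jwks_uri where the token signing keys are published.
        "discovery_url": issuer_url.rstrip("/") + "/.well-known/openid-configuration",
    }


if __name__ == "__main__":
    details = oidc_provider_details(
        "https://oidc.eks.us-west-2.amazonaws.com/id/EXAMPLED539D4633E53DE1B71EXAMPLE",
        "111122223333",
    )
    for name, value in details.items():
        print(f"{name}: {value}")
```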

Assuming the IAM Role in a Pod

Thanks to the OIDC provider, we managed to grant our great-serviceaccount permission to assume a Role! However, being allowed to assume it does not mean it will actually do so.

Luckily, AWS EKS allows for setting an annotation on ServiceAccounts to indicate which Role to assume:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: great-serviceaccount
  namespace: great-namespace
  annotations:
    eks.amazonaws.com/role-arn: "arn:aws:iam::111122223333:role/s3-writer"
```

Here we tell our ServiceAccount that we want it to assume the s3-writer Role.

Using this ServiceAccount in a pod is then just a matter of setting the serviceAccountName field in the Pod’s spec.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: great-pod
  namespace: great-namespace
spec:
  serviceAccountName: great-serviceaccount
  [...]
```

The rest is magic: it “just works”. But why? Would the same work in a non-AWS Kubernetes cluster (on-premises, for example)? Would I be able to use the eks.amazonaws.com/role-arn annotation in a different cluster?

The lazy answer is: no, it’s an AWS EKS feature.

The real answer however is a bit different:

- no, you cannot use it out of the box elsewhere. It is only shipped natively in AWS EKS.

- if you want to allow your Kubernetes cluster to integrate with AWS IAM, you can do it, with a fair bit of setup.

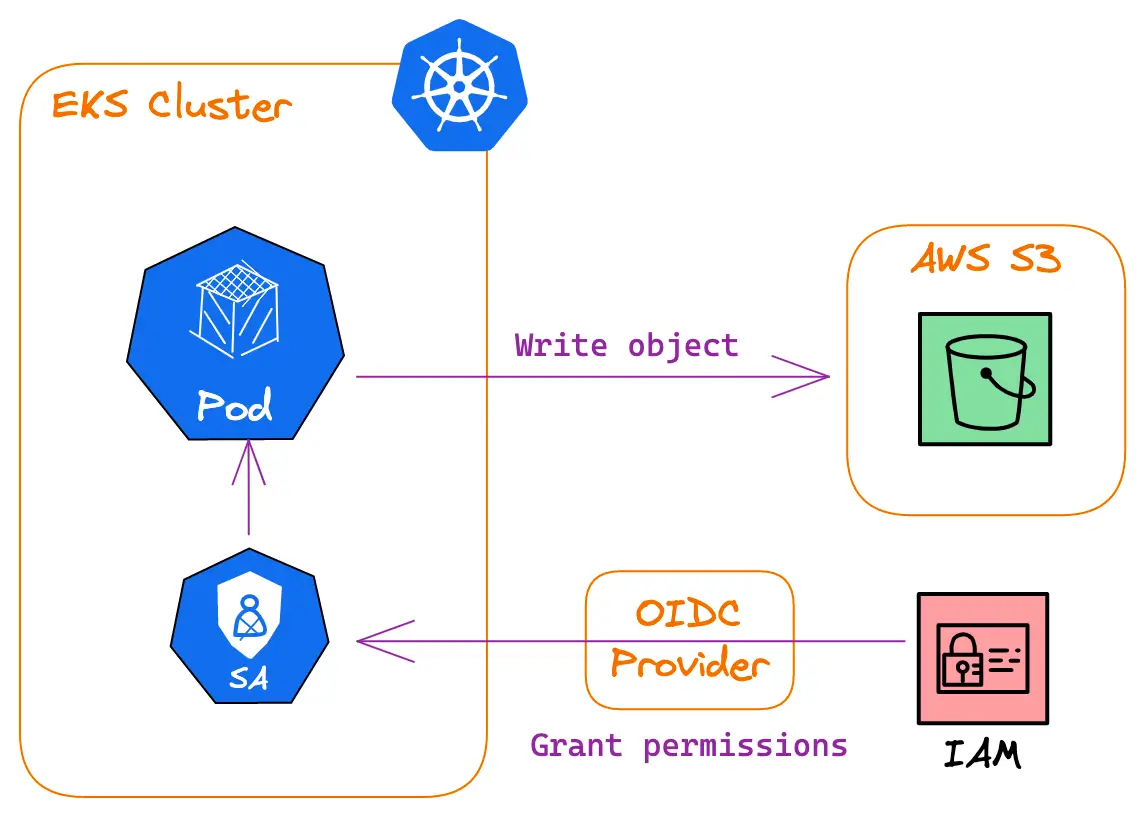

So how exactly does this “magic” happen? Let’s get back to our initial diagram (I enriched it a bit with what we have added since the beginning).

This view clearly shows who does what, but unfortunately it does not show every detail of what is going on.

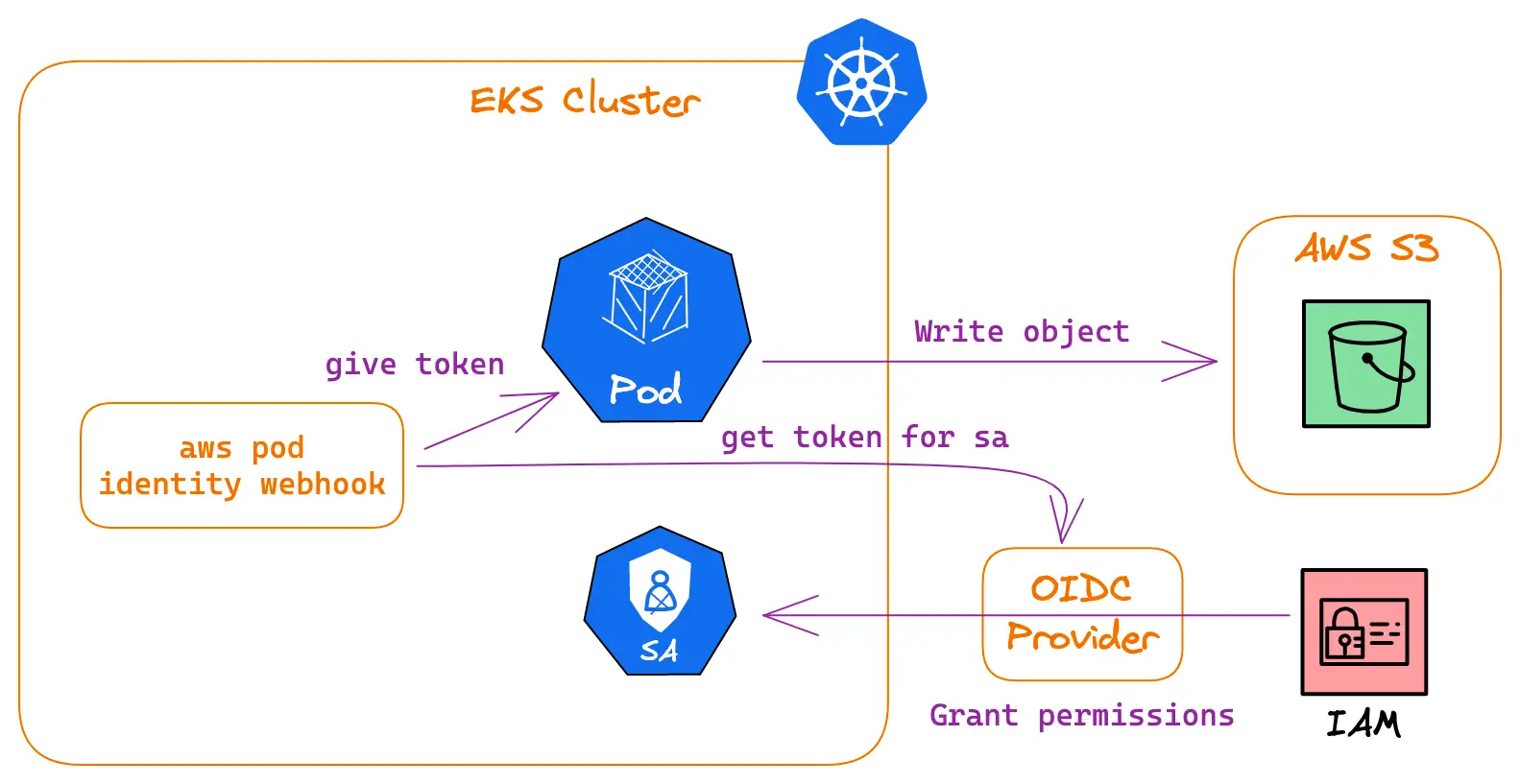

There is actually an additional component involved in this mechanism: the AWS Pod Identity Webhook.

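Its job is to wire the credentials configuration into the Pod. It does not fetch a token itself. Instead, it adds a projected ServiceAccount token volume to the Pod, which the kubelet fills with a token issued for the ServiceAccount. It also sets environment variables pointing to the Role ARN and to this token file, so that the AWS SDK can easily find them.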
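On the consuming side, the two variables in question are AWS_ROLE_ARN (the Role to assume) and AWS_WEB_IDENTITY_TOKEN_FILE (the path of the projected token). The following Python sketch mimics, in a very simplified way, what an AWS SDK’s web identity credential provider does with them. It reads the token from the file and prepares an STS AssumeRoleWithWebIdentity request, but it does not send that request. The global STS endpoint and the fallback session name are assumptions of this sketch. Real SDKs also cache the resulting temporary credentials and refresh them before they expire.

```python
import os
from urllib.parse import urlencode

# Assumption: the global STS endpoint; SDKs can also target regional ones.
STS_ENDPOINT = "https://sts.amazonaws.com/"


def web_identity_request(environ=None):
    """Build (endpoint, form body) for STS AssumeRoleWithWebIdentity.

    The request needs no AWS signature: the OIDC token is the proof of
    identity. Nothing is sent over the network here.
    """
    env = os.environ if environ is None else environ
    role_arn = env["AWS_ROLE_ARN"]
    token_path = env["AWS_WEB_IDENTITY_TOKEN_FILE"]
    # The kubelet rotates the projected token, so read it fresh every time.
    with open(token_path, encoding="utf-8") as token_file:
        token = token_file.read().strip()
    params = {
        "Action": "AssumeRoleWithWebIdentity",
        "Version": "2011-06-15",
        "RoleArn": role_arn,
        # Hypothetical fallback name when AWS_ROLE_SESSION_NAME is not set.
        "RoleSessionName": env.get("AWS_ROLE_SESSION_NAME", "great-pod-session"),
        "WebIdentityToken": token,
    }
    return STS_ENDPOINT, urlencode(params)
```

Inside a Pod mutated by the webhook, calling web_identity_request() with no argument would pick up the injected variables. Passing a dict instead makes it easy to try the function outside a cluster.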

For each new Pod created in the cluster, the webhook is called and alters the Pod spec if the attached ServiceAccount bears the eks.amazonaws.com/role-arn annotation. Here is a simplified example of what the webhook adds (inspired by its docs):

```yaml
# Added to each container of the Pod
env:
  - name: AWS_ROLE_ARN
    value: "arn:aws:iam::111122223333:role/s3-writer"
  - name: AWS_WEB_IDENTITY_TOKEN_FILE
    value: "/var/run/secrets/eks.amazonaws.com/serviceaccount/token"
volumeMounts:
  - mountPath: "/var/run/secrets/eks.amazonaws.com/serviceaccount/"
    name: aws-token

# Added to the Pod spec
volumes:
  - name: aws-token
    projected:
      sources:
        - serviceAccountToken:
            audience: "sts.amazonaws.com"
            path: token
```

I do not go into detail here about what this token actually is, what it contains, or what it looks like. If you want to find out more about it (and about how OIDC works), check out the Illustrated Guide to OAuth and OpenID Connect that Okta published a while back. I found it very instructive!
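The mutation shown in the YAML above can be sketched as a pure Python function over Pod and ServiceAccount manifests represented as dicts. This only illustrates the logic and is not the webhook’s actual code. The function name is hypothetical, and the real webhook handles more cases and options. Also note that a real mutating admission webhook does not return a whole new Pod: it answers the API server with a JSON Patch inside an AdmissionReview response.

```python
import copy

ROLE_ANNOTATION = "eks.amazonaws.com/role-arn"
TOKEN_DIR = "/var/run/secrets/eks.amazonaws.com/serviceaccount/"


def mutate_pod(pod: dict, service_account: dict) -> dict:
    """Return a copy of `pod` with the web identity settings injected.

    Pods whose ServiceAccount lacks the role annotation are returned as-is.
    """
    annotations = service_account.get("metadata", {}).get("annotations", {})
    role_arn = annotations.get(ROLE_ANNOTATION)
    if role_arn is None:
        return pod  # no annotation: the Pod is left untouched
    mutated = copy.deepcopy(pod)
    spec = mutated["spec"]
    for container in spec.get("containers", []):
        # Tell the AWS SDK which Role to assume and where the token lives.
        container.setdefault("env", []).extend([
            {"name": "AWS_ROLE_ARN", "value": role_arn},
            {"name": "AWS_WEB_IDENTITY_TOKEN_FILE", "value": TOKEN_DIR + "token"},
        ])
        container.setdefault("volumeMounts", []).append(
            {"mountPath": TOKEN_DIR, "name": "aws-token"})
    # Projected token with the audience expected by the trust policy's aud condition.
    spec.setdefault("volumes", []).append({
        "name": "aws-token",
        "projected": {"sources": [{"serviceAccountToken": {
            "audience": "sts.amazonaws.com", "path": "token"}}]},
    })
    return mutated


if __name__ == "__main__":
    pod = {
        "metadata": {"name": "great-pod", "namespace": "great-namespace"},
        "spec": {"serviceAccountName": "great-serviceaccount",
                 "containers": [{"name": "app", "image": "example/app"}]},
    }
    service_account = {"metadata": {
        "name": "great-serviceaccount",
        "annotations": {ROLE_ANNOTATION: "arn:aws:iam::111122223333:role/s3-writer"},
    }}
    print(mutate_pod(pod, service_account)["spec"]["containers"][0]["env"])
```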

Alternative ways of granting access

There are a few other methods worth mentioning to grant a Pod running in Kubernetes access to AWS resources. For this example, we will keep the same setup, where a Pod wants to write objects to an S3 bucket. Let’s quickly go over them.

Use an IAM User

To do that, you can create an AWS IAM User with permission to write objects to your S3 bucket. You can then generate an access key pair (access key ID and secret access key) for this user and give it to your Pod.

This is not ideal, because IAM User access keys are long-lived: they never expire on their own. If they leak for any reason, an attacker can use them until someone deactivates or deletes them. With the OIDC method, your Pod only ever gets temporary, short-lived credentials.

This also means that this access key pair needs to be handled like a secret: you need to store it in a safe place, like a secret manager. All this management adds to the operational load, because it needs to be done for each Kubernetes workload that requires access to AWS resources.

Use the Node’s IAM Role

Technically, this is possible because, by default, Pods can obtain credentials for the IAM Role the Kubernetes Nodes run with (their instance profile) by querying the EC2 instance metadata service. This is not a real “feature”, but rather a side effect of the default configuration. The AWS Docs for EKS Best Practices explain how to restrict access to the instance profile assigned to the worker node, in order to prevent Pods from using it.

This is not a good way to grant access to a pod, especially because it means any other pod running on the node will get the same permissions even if it should not. Please do not do this.

To sum this up, there are not so many alternatives and definitely no good ones.

Conclusion

In this article, we took an in-depth look at how to grant a Pod in Kubernetes access to AWS resources with IAM. This covers the scenario where a workload running inside the cluster needs access to resources outside of it.

The opposite can be interesting as well: how do you grant an IAM entity (a user or a Role) access to resources within the cluster? Luckily for you, we have an article about managing EKS access and permissions that you can read through!