Posted on 23 February 2023, updated on 11 December 2023.

Do you need to test features on an existing environment? Or does your GitOps workflow have TLS certificate or FinOps constraints?

Let's see how to use ArgoCD and Traefik to set up a header routing solution for an application in Kubernetes.

Installation of the environment

ℹ️ The sources for this article are in this GitLab repository; I suggest you clone it.

In this article, we will implement a header routing solution, which is a way to route traffic based on the HTTP request headers in addition to (or instead of) the "traditional" HTTP Host routing method.

There are a few prerequisites to implementing this method in Kubernetes. The first one is to have a Kubernetes cluster.

If, like me, you don't have one, you can use kind (which lets you deploy a simple Kubernetes cluster in Docker): there is a predefined configuration in the repository that will save you time.

    kind create cluster --config kind.yaml

There are many solutions for automated deployment and ingress in Kubernetes. Here we will use ArgoCD for the CD part and Traefik as the Ingress Controller.

💡 Traefik is simple to configure, but we could replace it with Istio or an equivalent.

Let's start by installing Traefik. For that, we will use the official Helm chart and a basic configuration that lets us name the ingressClass we will use (here ingressClass: "traefik"):

    helm upgrade --install --create-namespace --namespace=traefik \
      --repo https://helm.traefik.io/traefik \
      -f ./values/traefik.yaml traefik traefik

Now let's install ArgoCD, still with the official Helm chart, and a simple configuration that uses the traefik ingress class for access to the dashboard (the file argocd.yaml must be modified to change the hostname):

    helm upgrade --install --create-namespace --namespace=argocd \
      --repo https://argoproj.github.io/argo-helm \
      -f ./values/argocd.yaml argocd argo-cd

The ArgoCD dashboard should now be accessible on the host defined in the values/argocd.yaml file, with the admin account and the password retrieved with the following command:

    kubectl -n argocd get secret argocd-initial-admin-secret \
      -o jsonpath="{.data.password}" | base64 -d

💡 To go further, we can manage Traefik and ArgoCD in ArgoCD so that they are automatically deployed in case of an update... But that's another topic 😀
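The `base64 -d` step in the password command is needed because Kubernetes stores the values of a Secret's `data` field base64-encoded. As a small convenience, the command can be wrapped in a shell function; the function name `argocd_admin_password` is my own and is not part of ArgoCD or of the repository.

```shell
#!/bin/sh
# Hypothetical helper: print the initial ArgoCD admin password.
# Assumes kubectl points at the cluster where ArgoCD was installed
# in the "argocd" namespace, as in the helm command above.
argocd_admin_password() {
  # The Secret stores the password base64-encoded, hence the decode step.
  kubectl -n argocd get secret argocd-initial-admin-secret \
    -o jsonpath="{.data.password}" | base64 -d
}

# Usage (the password is printed without a trailing newline, so add one):
#   argocd_admin_password; echo
```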

Setting up Header Routing

From here, we will use ArgoCD to deploy our application. So we need to have a Git repository that will be used as a source by ArgoCD.

For this presentation, we will use the repository that we have already cloned (at the beginning of the article).

A Helm chart is already present in the charts/frontend directory: it is a simple chart that creates a Deployment of the whoami image, a Service, and an IngressRoute (the Traefik equivalent of an Ingress).

You just have to modify the values.yaml file to change the host of the ingress:

    nameOverride: ""
    fullnameOverride: ""
    ingress:
      routes:
        - match: "Host(`frontend.vcap.me`)" # 👈️ change this to your real domain name

In order for ArgoCD to deploy our Helm chart, we will create an Application resource.

In the repository, there is already an application in argocd/frontend.yaml. You will have to modify it to point to your repository:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: argocd
      annotations:
        argocd.argoproj.io/manifest-generate-paths: .
      finalizers:
        - resources-finalizer.argocd.argoproj.io
    spec:
      project: default
      destination:
        namespace: frontend
        name: in-cluster
      source:
        path: charts/frontend
        # 👇️ change this to your public repository
        repoURL: git@gitlab.com:padok/argocd-app.git
        targetRevision: main
        helm:
          valueFiles:
            - "values.yaml"
      syncPolicy:
        automated:
          selfHeal: true
          prune: true

ℹ️ If your repository is not public, you will have to add the credentials in ArgoCD.

Now we commit and push these changes, then deploy our application in ArgoCD to trigger the synchronization of our chart in the cluster:

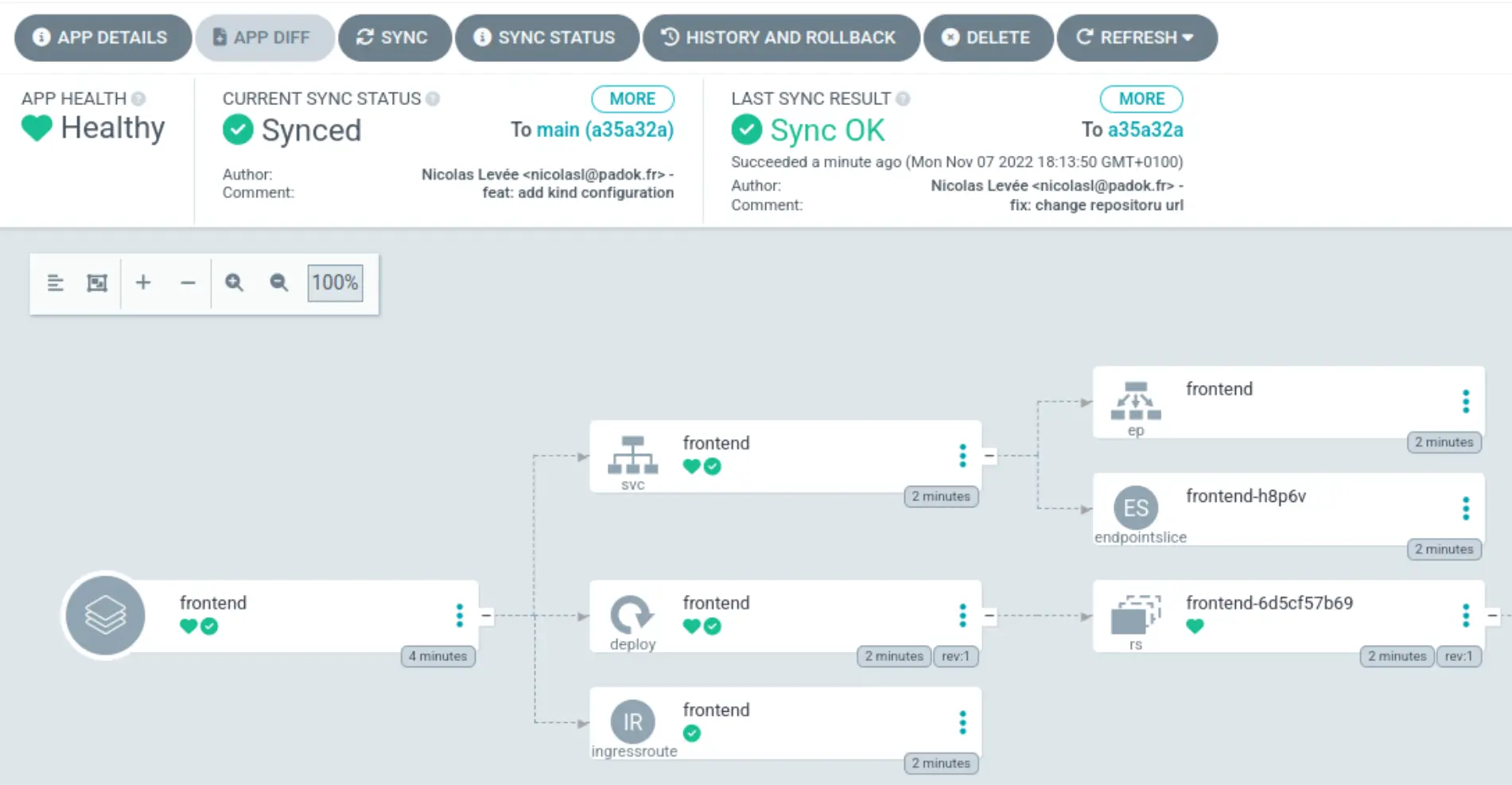

    kubectl apply -n argocd -f argocd/frontend.yaml

The application should now be available at the host you defined in the values file of the Helm chart, and visible in the ArgoCD interface.

The application is deployed correctly, but the routing is still based on the hostname. We will now add a second application, frontend-dev, and route the requests that carry the x-frontend-env header to it.
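To make the intended behaviour concrete, here is a toy model of the routing decision written as a shell function. It is only a sketch, not Traefik's implementation: the function name `pick_backend` is hypothetical, while the host, the header value and the two backend names come from this article. It mirrors Traefik's default behaviour of giving longer rules a higher priority, which is why the host-plus-header rule is checked before the host-only rule.

```shell
#!/bin/sh
# Toy model of the routing decision (illustration only).
# Usage: pick_backend <host> [value of the x-frontend-env header]
pick_backend() {
  host="$1"
  env_header="${2:-}"

  # Both routes require the same Host, so anything else matches nothing.
  if [ "$host" != "frontend.vcap.me" ]; then
    echo "no-match"
    return 1
  fi

  # The Host + Headers rule is the longer one, so it is evaluated first.
  if [ "$env_header" = "dev" ]; then
    echo "frontend-dev"
  else
    # Fallback: the Host-only rule of the original application.
    echo "frontend"
  fi
}

pick_backend frontend.vcap.me dev   # prints frontend-dev
pick_backend frontend.vcap.me       # prints frontend
```

Note that a request with any other header value (for example `staging`) still falls back to the original application, since only the exact value `dev` is matched.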

The frontend-dev application will use the values-dev.yaml file of the Helm chart. This file adds a routing rule based on the x-frontend-env header, Headers(`x-frontend-env`, `dev`). Once the Helm chart is rendered, the IngressRoute should look like this:

    apiVersion: traefik.containo.us/v1alpha1
    kind: IngressRoute
    metadata:
      name: frontend-dev
      labels:
        helm.sh/chart: frontend-0.1.0
        app.kubernetes.io/name: frontend
        app.kubernetes.io/instance: frontend-dev
        app.kubernetes.io/version: "1.16.0"
        app.kubernetes.io/managed-by: Helm
      annotations:
        kubernetes.io/ingress.class: "traefik"
    spec:
      entryPoints:
        - web
        - websecure
      routes:
        - kind: Rule
          match: "Host(`frontend.vcap.me`) && (Headers(`x-frontend-env`, `dev`))"
          services:
            - name: frontend-dev
              port: 80

All that's left to do is to test. We start by deploying the application:

    kubectl apply -n argocd -f argocd/frontend-dev.yaml

We can now check that the routing works correctly with a simple curl command:

    $ curl -s -H "X-Frontend-Env: dev" http://frontend.vcap.me/api | jq .hostname
    "frontend-dev-784d84854c-bkszs"
    $ curl -s http://frontend.vcap.me/api | jq .hostname
    "frontend-6d5cf57b69-pjnjr"

The output clearly shows that, depending on the headers sent with the request, we do not reach the same version of the application (not the same pod).

To go further, we could automate this workflow with an ApplicationSet. This resource lets us trigger the creation of applications from several sources, for example from a Git branch pattern.
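One practical detail of generating an application per branch is naming: a branch such as `feature/Login_Page` cannot be used as-is in a Kubernetes resource name. The sketch below shows one possible convention. The helper names, the `frontend-` prefix and the 40-character limit are my own assumptions; this is not how ApplicationSet itself derives names, and the repository of this article does not contain these functions.

```shell
#!/bin/sh
# Hypothetical naming helpers, not part of ArgoCD or of the article's repository.

# Turn a Git branch name into a lowercase, dash-separated slug.
branch_slug() {
  printf '%s\n' "$1" \
    | tr '[:upper:]' '[:lower:]' \
    | sed -e 's/[^a-z0-9]/-/g' -e 's/--*/-/g' \
    | cut -c1-40 \
    | sed -e 's/^-//' -e 's/-$//'
}

# Name of the Application that would serve this branch (assumed convention).
app_name_for_branch() {
  printf 'frontend-%s\n' "$(branch_slug "$1")"
}

app_name_for_branch "feature/Login_Page"   # prints frontend-feature-login-page
```

Under the same assumption, the slug could also serve as the value of the x-frontend-env header, so that each branch gets its own routing rule.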

Conclusion

This type of routing is useful in several situations:

- Backend and frontend are interdependent: on a two- or three-tier application, it can be useful to deploy a frontend feature and test it without having to deploy a new backend.

- Wildcard or per-host certificates are not an option: in some situations, it can be complicated to get wildcard certificates (for example on Google Cloud HTTPS LB) or to manage an unlimited number of certificates for hostname-based routing.

- DevX: feature testing during development is simpler, directly on the production environment.

- Reduced costs: you don't have to redeploy everything when only a part of the application is being tested.